Industrial robots are everywhere: what happens if they get compromised? Is compromising them hard? Are they attractive targets for attackers? How can we improve their security? To answer these questions, last year we studied the security landscape of industrial robots, and we analysed (and compromised) a widely deployed robot.

By Marcello Pogliani

PhD student at the NECSTLab, working on Systems Security

Industrial robots are evolving rapidly. On one side, giant “caged” robots are being complemented by smaller “collaborative” models designed to share the workspace with human workers; on the other, robots are becoming more “intelligent”, for example through better interconnection for tasks such as remote maintenance, and through integration with information systems. This means that robots, once “air-gapped”, are now exposed to new avenues of attack. What happens (Skynet aside) if attackers compromise them?

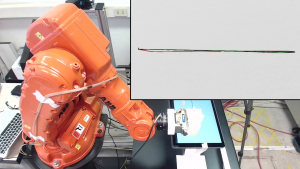

To answer this question, thanks to our colleagues at the MERLIN lab, we studied their ABB IRB 140 robot with its IRC5 controller. We started by reverse engineering the software running on this robot controller, and we found a few vulnerabilities and design issues. By exploiting them, we were able to remotely control the robot. Although ABB promptly fixed the specific vulnerabilities we found (thanks!), we used the capabilities of this robot and our level of access as a case study to understand what can happen if an attacker does exactly what we did.

Robots must accurately “read” from the physical world through sensors and “write” through actuators, refuse to execute self-damaging control logic, and never harm humans. Once we have compromised a robot, we can violate these “laws”. We can stealthily change critical parameters hidden in the obfuscated configuration. By doing so, we can reduce the accuracy of the robot’s movements (e.g., while soldering or cutting), introducing micro-defects that subtly damage the production and lead to economic and/or reputational damage. By tweaking the robot’s calibration, we can make the controller “unstable” and damage the robot: after all, exploiting a software vulnerability in a cyber-physical system such as a robot has immediate physical consequences! We can also alter the information displayed to the operator, for example by showing that the robot is in “manual mode” when it is actually in “automatic mode”: the operator may think that it’s safe to perform a certain action when it isn’t. While standard-mandated safeguards ensure that the robot is safe for the operator even in this case, we show that this attack can have important consequences anyway.
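To give a feeling for why small parameter changes matter, here is a minimal sketch of a toy single-axis position loop. It is not the IRC5 control logic and is not taken from our research code: the function `simulate_axis` and the parameters `gain` and `offset` are hypothetical names chosen for illustration, and the model (each step moves the axis by `gain` times the measured error) is a deliberately simplified assumption.

```python
def simulate_axis(target, gain, offset=0.0, steps=50):
    """Toy model (an assumption, not a real robot controller).

    At each step the controller measures the axis position, computes
    the error with respect to the target, and moves by gain * error.
    `offset` models a tampered calibration value: the controller "sees"
    the axis shifted by that amount.
    """
    position = 0.0
    trajectory = []
    for _ in range(steps):
        measured = position + offset      # what the controller believes
        error = target - measured
        position += gain * error          # proportional correction
        trajectory.append(position)
    return trajectory


# Nominal parameters: the axis settles on the target.
nominal = simulate_axis(target=100.0, gain=0.5)

# Slightly altered calibration: the axis settles, but in the wrong place.
# The controller reports no error, yet every part is a little off.
miscalibrated = simulate_axis(target=100.0, gain=0.5, offset=0.2)

# Altered gain: in this toy model the loop is stable only for 0 < gain < 2,
# so the axis overshoots more and more at every step.
unstable = simulate_axis(target=100.0, gain=2.5)

print("nominal final position:      ", nominal[-1])
print("miscalibrated final position:", miscalibrated[-1])
print("unstable final position:     ", unstable[-1])
```

In this simplified model, the calibration offset produces a small, persistent positioning error (the “micro-defect” scenario), while the altered gain makes the loop diverge (the “unstable controller” scenario). A real controller is far more complex, but the intuition is the same: the attacker only needs to change a number.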

One question remains: how can an attacker connect to the robot? Robots are usually not directly exposed to the Internet. However, many robots are indirectly connected through “industrial routers”, which act as bridges between a robot (or another industrial device, such as a PLC) and the Internet. Just by searching on shodan.io, we found 80,000 Internet-connected industrial routers; 5,000 of them had authentication disabled. Network access aside, there’s always physical access, or the compromise of a computer or device that will later be connected to the robot. Given these concrete attack vectors, the scenarios with critical consequences, and the fact that robots are not isolated from their adversaries, we call for greater security awareness in industrial robotics, and for considering the identified attack scenarios when designing and deploying robots.

Curious about the details of our research? Head over to the project page, read the article published at the 38th IEEE Symposium on Security and Privacy, or watch our talk at Black Hat USA 2017.

Note: this research is a collaborative effort between Davide Quarta (PhD Student at the NECSTLab), myself, Mario Polino (Postdoctoral Researcher at the NECSTLab), Federico Maggi (Senior Researcher at Trend Micro), Andrea Zanchettin (Assistant Professor at the MERLIN Lab) and Stefano Zanero (Associate Professor at the NECSTLab).