Domain Specific Languages are gaining more and more interest thanks to the significant level of performance they can reach on different architectures. FROST is a common backend able to accelerate on FPGA applications developed in different DSLs.

By Emanuele Del Sozzo

Ph.D. student @ Politecnico di Milano

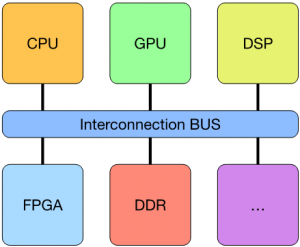

Due to the reaching of the end of Dennard scaling and Moore’s law, we are experiencing a growing interest towards Heterogeneous System Architectures (HSAs) as a promising solution to boost performance and, at the same time, reduce power consumption. The combination of different hardware accelerators, like GPUs, FPGAs, and ASICs, along with CPUs, allows to choose the most suitable architecture for a specific task, and, for this reason, many high-performance systems are currently taking advantage of heterogeneity.

Among such architectures, FPGAs are a good candidate for high performance computations since they provide a good trade-off in terms of performance and power consumption, as well as a high level of flexibility. Moreover, the possibility to configure FPGAs to implement a custom architecture (for instance, in terms of data precision) permits to tailor it to the target computation. However, the major flaw of FPGAs is their hard programmability and steep learning curve.

Although High-Level Synthesis (HLS) tools are easing the implementation of algorithms on FPGAs, the design process is still complex. An emerging solution to this problem is to write algorithms in a Domain Specific Language (DSL) and to let the DSL compiler generate efficient code targeting FPGAs.

Proposed approach

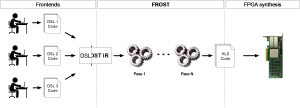

Within this context, we designed FROST, a unified backend that enables DSL compilers to target FPGA architectures. A DSL compiler can generate the FROST Intermediate Representation (IR) and use FROST to generate efficient HLS code to target FPGA. FROST leverages a scheduling co-language to specify FPGA specific optimizations (loop pipelining, unrolling, vectorization) as well as the type of communication with the off-chip memory. After applying the transformations on the IR, FROST generates a C/C++ code suitable for HLS tools. Finally, the outcome of HLS phase is synthesized and implemented on FPGA using a synthesis toolchain.

Given that many FROST optimizations require higher level loop nest transformations (e.g., vectorization requires the loops to be split), FROST is designed to integrate well with higher level loop nest transformation framework (such as

the Halide mid-level compiler and the Tiramisu optimization framework). These frameworks are a layer between DSL languages and the FPGA code generation layer. This separation allows FROST to fully focus on efficient FPGA code generation and to leave loop transformations that are architecture-independent to higher level frameworks.

Experimental results summary

We evaluated FROST with multiple image processing applications designed in TIRAMISU, and compared the results against the Vivado HLS Video Library, which provides already optimized image processing kernels. Thanks to the flexibility and optimizations available both in FROST and TIRAMISU, we outperformed the Video Library implementations by at least 3X.

This work was developed during my research visit at MIT, where I collaborated with the Commit group, under the supervision of Prof. Saman Amarasinghe. An initial version of this work was presented at ICCD 2017 conference held in Boston.

Emanuele Del Sozzo on LinkedIn